♟ Tactics for better results

The basics can get you pretty far with prompt engineering. However, a few tactics can get you noticeably better results. They work especially well on multi-step reasoning problems, like word problems, common sense situations, and strategy questions.

If you're interested in Prompt Engineering and want a more technical deep dive, check out Learn Prompting.

Chain of Thought

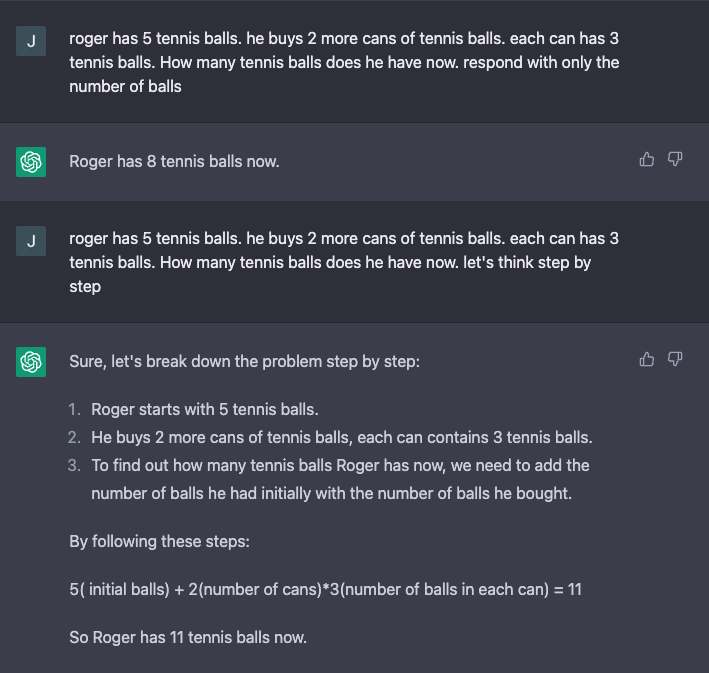

Chain of Thought is a method that shows language models how to reason through a problem. Large language models struggle with multi-step reasoning. To get past this limitation, we give the model step-by-step worked examples rather than bare questions and answers.

This strategy can solve complicated reasoning tasks, like word problems, common sense situations, and understanding data. On some of these tasks, Chain of Thought prompts can bring performance close to human levels of skill.

Chain of Thought allows us to decompose tasks and create a more predictable and correct output. This process works best with large language models, like GPT (ChatGPT included) and PaLM (Google's model).

Chain of Thought offers the AI some idea of how it can tackle the problem at hand.
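As a rough illustration of the idea above, here is a minimal sketch that assembles a Chain of Thought prompt from worked examples. The example question, the `Q:`/`A:` layout, and the function name `build_cot_prompt` are all assumptions made for illustration; the sketch only builds the prompt text and does not call any model.

```python
# Hypothetical worked example: the answer spells out each reasoning step
# instead of giving only the final number.
EXAMPLES = [
    {
        "question": (
            "Roger has 5 tennis balls. He buys 2 cans of 3 tennis balls each. "
            "How many tennis balls does he have now?"
        ),
        "reasoning": "Roger starts with 5 balls. 2 cans of 3 balls is 6 balls. 5 + 6 = 11.",
        "answer": "11",
    },
]


def build_cot_prompt(examples, new_question):
    """Return a prompt with step-by-step examples followed by the new question."""
    parts = []
    for ex in examples:
        # Each example shows the reasoning first, then the final answer.
        parts.append(
            f"Q: {ex['question']}\n"
            f"A: {ex['reasoning']} The answer is {ex['answer']}."
        )
    # Leave the last answer open so the model continues in the same style.
    parts.append(f"Q: {new_question}\nA:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_cot_prompt(EXAMPLES, "A baker has 3 trays of 12 rolls and sells 10. How many are left?"))
```

The resulting text is what you would paste or send to the model. Adding more examples of the same shape usually just means appending to `EXAMPLES`.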

Let's think step by step

This has proven to be one of the most powerful prompts for language models. Like a second grader rushing through a math problem, the models struggle when they rush.

A great solution is to instruct the machine to slow down its thought process. You can do this by adding the command "Let's think step by step" at the end of a prompt. This command tells the model that it needs to break the problem down into its component pieces before moving on.

As you can see in the ChatGPT image above, ChatGPT gets the answer wrong when it doesn't think. However, when it thinks step by step, the answer is correct.

Telling the model to go slow allows it to generate a chain of thought on its own. This is useful when you want to solve multi-step problems and can't write examples yourself.
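In code, this tactic is just a matter of appending the phrase to whatever prompt you already have. The sketch below assumes a hypothetical helper name, `add_step_by_step`, and only prepares the prompt text; how you send it to a model is up to you.

```python
TRIGGER = "Let's think step by step."


def add_step_by_step(prompt: str) -> str:
    """Append the step-by-step instruction to the end of a prompt."""
    # Strip trailing whitespace so the phrase always starts on its own line.
    return f"{prompt.rstrip()}\n{TRIGGER}"


if __name__ == "__main__":
    print(add_step_by_step("A farmer has 17 sheep. All but 9 run away. How many are left?"))
```

No examples are needed here, which is the difference from a Chain of Thought prompt with worked examples.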

Try these out when you can't get a solid, reproducible answer, or when the answer you get is wrong.

Fine Tuning

Fine-tuning is the process of further training a pre-trained machine learning model on a new dataset. It adapts the model to a specific task or domain by adjusting the model's parameters so that it performs well on domain-specific data. This is a more technical and complex route to solving a problem, but it can provide better results.

Fine-tuning lets you create models that sound a certain way. For example, to make a bot with a tone like an Obama speech, you would create a file of all of Obama's speeches and upload it to the fine-tuning API. You need a few hundred sentences, but you could then have a model that sounds eerily like the former president.
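Preparing that file might look something like the sketch below, which turns a list of sentences into JSON Lines text (one JSON record per line). The record layout with `prompt` and `completion` fields is an assumption, as is the function name `speeches_to_jsonl`; the exact format depends on the fine-tuning API you use, so check its documentation before uploading. The sentences shown are placeholders, not real speech text.

```python
import json


def speeches_to_jsonl(sentences):
    """Convert sentences into JSON Lines text, one training record per line.

    The {"prompt": ..., "completion": ...} layout is an assumed format;
    adjust the field names to whatever your fine-tuning API expects.
    """
    records = []
    for sentence in sentences:
        text = sentence.strip()
        if not text:
            continue  # skip blank lines left over from the source text
        records.append(json.dumps({"prompt": "", "completion": text}))
    return "".join(line + "\n" for line in records)


if __name__ == "__main__":
    # Placeholder sentences; in practice you would load a few hundred
    # sentences from your own collection of speeches.
    sample = ["First placeholder sentence.", "Second placeholder sentence."]
    with open("speeches.jsonl", "w", encoding="utf-8") as f:
        f.write(speeches_to_jsonl(sample))
```

The resulting `speeches.jsonl` file is what you would then upload through your provider's fine-tuning tooling.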

Learn Prompting

If you want to learn more about prompting, check out Learn Prompting. The site covers everything from the basics to quite sophisticated ideas in prompting. The people who run it are always updating it and are at the cutting edge of prompt engineering.